Ultralytics YOLOv8.0.220 🚀 Python-3.10.12 torch-2.1.0+cu118 CUDA:0 (Tesla T4, 15102MiB)

engine/trainer: task=detect, mode=train, model=yolov8s.pt, data=/content/Raccoon-38/data.yaml, epochs=10, patience=50, batch=16, imgsz=640, save=True, save_period=-1, cache=False, device=None, workers=8, project=None, name=train2, exist_ok=False, pretrained=True, optimizer=auto, verbose=True, seed=0, deterministic=True, single_cls=False, rect=False, cos_lr=False, close_mosaic=10, resume=False, amp=True, fraction=1.0, profile=False, freeze=None, overlap_mask=True, mask_ratio=4, dropout=0.0, val=True, split=val, save_json=False, save_hybrid=False, conf=None, iou=0.7, max_det=300, half=False, dnn=False, plots=True, source=None, vid_stride=1, stream_buffer=False, visualize=False, augment=False, agnostic_nms=False, classes=None, retina_masks=False, show=False, save_frames=False, save_txt=False, save_conf=False, save_crop=False, show_labels=True, show_conf=True, show_boxes=True, line_width=None, format=torchscript, keras=False, optimize=False, int8=False, dynamic=False, simplify=False, opset=None, workspace=4, nms=False, lr0=0.01, lrf=0.01, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=7.5, cls=0.5, dfl=1.5, pose=12.0, kobj=1.0, label_smoothing=0.0, nbs=64, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.0, copy_paste=0.0, cfg=None, tracker=botsort.yaml, save_dir=runs/detect/train2
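
The `engine/trainer:` line packs every resolved setting into one comma-separated string, which is hard to scan. A minimal sketch of splitting it into a dictionary, assuming no value contains `, ` (true for the line above). `parse_trainer_args` is a hypothetical helper, not part of Ultralytics.

```python
def parse_trainer_args(line: str) -> dict[str, str]:
    """Split an 'engine/trainer: k=v, k=v, ...' log line into a dict of strings."""
    # Drop the 'engine/trainer: ' prefix, keeping only the key=value list.
    _, _, body = line.partition(": ")
    args = {}
    for item in body.split(", "):
        key, _, value = item.partition("=")
        args[key.strip()] = value.strip()
    return args


# Shortened sample of the line above; the full line parses the same way.
sample = (
    "engine/trainer: task=detect, mode=train, model=yolov8s.pt, "
    "epochs=10, batch=16, imgsz=640, optimizer=auto"
)
args = parse_trainer_args(sample)
print(args["model"], args["epochs"], args["optimizer"])
```

All values stay strings here; converting `epochs` or `lr0` to numbers is left to the caller.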

Downloading https://ultralytics.com/assets/Arial.ttf to '/root/.config/Ultralytics/Arial.ttf'...

100% 755k/755k [00:00<00:00, 12.3MB/s]

2023-11-29 15:19:03.571951: E tensorflow/compiler/xla/stream_executor/cuda/cuda_dnn.cc:9342] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered

2023-11-29 15:19:03.572023: E tensorflow/compiler/xla/stream_executor/cuda/cuda_fft.cc:609] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered

2023-11-29 15:19:03.572079: E tensorflow/compiler/xla/stream_executor/cuda/cuda_blas.cc:1518] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered

Overriding model.yaml nc=80 with nc=1

             from  n   params  module                                arguments
  0            -1  1      928  ultralytics.nn.modules.conv.Conv      [3, 32, 3, 2]
  1            -1  1    18560  ultralytics.nn.modules.conv.Conv      [32, 64, 3, 2]
  2            -1  1    29056  ultralytics.nn.modules.block.C2f      [64, 64, 1, True]
  3            -1  1    73984  ultralytics.nn.modules.conv.Conv      [64, 128, 3, 2]
  4            -1  2   197632  ultralytics.nn.modules.block.C2f      [128, 128, 2, True]
  5            -1  1   295424  ultralytics.nn.modules.conv.Conv      [128, 256, 3, 2]
  6            -1  2   788480  ultralytics.nn.modules.block.C2f      [256, 256, 2, True]
  7            -1  1  1180672  ultralytics.nn.modules.conv.Conv      [256, 512, 3, 2]
  8            -1  1  1838080  ultralytics.nn.modules.block.C2f      [512, 512, 1, True]
  9            -1  1   656896  ultralytics.nn.modules.block.SPPF     [512, 512, 5]
 10            -1  1        0  torch.nn.modules.upsampling.Upsample  [None, 2, 'nearest']
 11       [-1, 6]  1        0  ultralytics.nn.modules.conv.Concat    [1]
 12            -1  1   591360  ultralytics.nn.modules.block.C2f      [768, 256, 1]
 13            -1  1        0  torch.nn.modules.upsampling.Upsample  [None, 2, 'nearest']
 14       [-1, 4]  1        0  ultralytics.nn.modules.conv.Concat    [1]
 15            -1  1   148224  ultralytics.nn.modules.block.C2f      [384, 128, 1]
 16            -1  1   147712  ultralytics.nn.modules.conv.Conv      [128, 128, 3, 2]
 17      [-1, 12]  1        0  ultralytics.nn.modules.conv.Concat    [1]
 18            -1  1   493056  ultralytics.nn.modules.block.C2f      [384, 256, 1]
 19            -1  1   590336  ultralytics.nn.modules.conv.Conv      [256, 256, 3, 2]
 20       [-1, 9]  1        0  ultralytics.nn.modules.conv.Concat    [1]
 21            -1  1  1969152  ultralytics.nn.modules.block.C2f      [768, 512, 1]
 22  [15, 18, 21]  1  2116435  ultralytics.nn.modules.head.Detect    [1, [128, 256, 512]]

Model summary: 225 layers, 11135987 parameters, 11135971 gradients, 28.6 GFLOPs
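
As a sanity check on the layer table, the per-layer `params` column should add up to the total in the "Model summary" line. A short sketch, with the counts copied by hand from the table above:

```python
# Per-layer parameter counts copied from the layer table above (layers 0-22).
layer_params = [
    928, 18560, 29056, 73984, 197632, 295424, 788480, 1180672, 1838080,
    656896, 0, 0, 591360, 0, 0, 148224, 147712, 0, 493056, 590336, 0,
    1969152, 2116435,
]

total = sum(layer_params)
print(total)  # 11135987, matching the "Model summary" line

# Share of all parameters held by the Detect head (layer 22).
print(f"{layer_params[-1] / total:.1%}")
```

The zero entries are the Upsample and Concat layers, which have no learnable weights.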

Transferred 349/355 items from pretrained weights

TensorBoard: Start with 'tensorboard --logdir runs/detect/train2', view at http://localhost:6006/

Freezing layer 'model.22.dfl.conv.weight'
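
The summary reports 11135987 parameters but 11135971 gradients. The gap is consistent with the single frozen tensor named above, assuming that tensor is the weight of a bias-free 1x1 convolution from 16 channels to 1. That shape is an assumption about the Detect head and is not stated anywhere in this log.

```python
total_params = 11_135_987      # "parameters" in the "Model summary" line
trainable_params = 11_135_971  # "gradients" in the same line
frozen = total_params - trainable_params

# Assumed shape of model.22.dfl.conv.weight: (out=1, in=16, kH=1, kW=1).
assumed_dfl_shape = (1, 16, 1, 1)
assumed_dfl_params = 1
for dim in assumed_dfl_shape:
    assumed_dfl_params *= dim

print(frozen, assumed_dfl_params)
```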

AMP: running Automatic Mixed Precision (AMP) checks with YOLOv8n...

AMP: checks passed ✅

train: Scanning /content/Raccoon-38/train/labels... 150 images, 0 backgrounds, 0 corrupt: 100% 150/150 [00:00<00:00, 1576.78it/s]

train: New cache created: /content/Raccoon-38/train/labels.cache

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

val: Scanning /content/Raccoon-38/valid/labels... 29 images, 0 backgrounds, 0 corrupt: 100% 29/29 [00:00<00:00, 919.25it/s]

val: New cache created: /content/Raccoon-38/valid/labels.cache

Plotting labels to runs/detect/train2/labels.jpg...

optimizer: 'optimizer=auto' found, ignoring 'lr0=0.01' and 'momentum=0.937' and determining best 'optimizer', 'lr0' and 'momentum' automatically...

optimizer: AdamW(lr=0.002, momentum=0.9) with parameter groups 57 weight(decay=0.0), 64 weight(decay=0.0005), 63 bias(decay=0.0)
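
The log only says that `optimizer=auto` replaced `lr0=0.01` with AdamW at `lr=0.002`. The sketch below shows one rule that reproduces those numbers. It reflects my understanding of the heuristic in releases around this version and should be treated as an assumption, not as the library's code. `auto_optimizer` is a hypothetical name.

```python
import math


def auto_optimizer(nc: int, n_images: int, batch: int, nbs: int, epochs: int):
    """Sketch of an assumed 'optimizer=auto' rule; not copied from Ultralytics."""
    # Assumed: expected number of optimizer steps, based on the larger of the
    # actual batch size and the nominal batch size (nbs).
    iterations = math.ceil(n_images / max(batch, nbs)) * epochs
    # Assumed: the AdamW learning rate shrinks as the class count grows.
    lr_fit = round(0.002 * 5 / (4 + nc), 6)
    if iterations > 10_000:
        return "SGD", 0.01, 0.9
    return "AdamW", lr_fit, 0.9


# Values from this log: nc=1, 150 training images, batch=16, nbs=64, epochs=10.
name, lr, momentum = auto_optimizer(nc=1, n_images=150, batch=16, nbs=64, epochs=10)
print(name, lr, momentum)
```

Under these assumptions a short run on a small dataset always lands on AdamW. Passing an explicit `optimizer` value would be the way to keep `lr0` and `momentum` as configured.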

Image sizes 640 train, 640 val

Using 2 dataloader workers

Logging results to runs/detect/train2

Starting training for 10 epochs...
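
Each epoch's progress bar in this run counts 10 training batches and 1 validation batch. The arithmetic below reproduces both counts from the dataset sizes in this log. The doubled validation batch size is an assumption. It is consistent with the `1/1` bar, since a batch of 16 would need two steps for 29 images.

```python
import math

n_train, n_val, batch = 150, 29, 16  # image counts and batch size from this log

train_batches = math.ceil(n_train / batch)
# Assumption: validation uses twice the training batch size.
val_batches = math.ceil(n_val / (2 * batch))

print(train_batches, val_batches)  # 10 1
```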

Closing dataloader mosaic

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

      Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
       1/10      3.93G      1.783      7.084      2.412          6        640: 100% 10/10 [00:07<00:00, 1.31it/s]
                 Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100% 1/1 [00:01<00:00, 1.84s/it]
                   all         29         29      0.666      0.655      0.717      0.279

      Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
       2/10      3.99G      1.371      2.883      2.013          8        640: 100% 10/10 [00:02<00:00, 3.42it/s]
                 Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100% 1/1 [00:00<00:00, 2.12it/s]
                   all         29         29      0.603      0.828      0.721      0.277

      Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
       3/10      4.01G      1.178      1.829      1.895          6        640: 100% 10/10 [00:02<00:00, 3.72it/s]
                 Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100% 1/1 [00:00<00:00, 2.28it/s]
                   all         29         29      0.813      0.759      0.807       0.39

      Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
       4/10      4.01G       1.26      1.564      1.923          6        640: 100% 10/10 [00:03<00:00, 2.74it/s]
                 Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100% 1/1 [00:00<00:00, 1.17it/s]
                   all         29         29      0.744      0.703      0.757      0.348

      Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
       5/10         4G      1.155      1.257      1.845          7        640: 100% 10/10 [00:02<00:00, 3.72it/s]
                 Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100% 1/1 [00:00<00:00, 2.27it/s]
                   all         29         29      0.632      0.862      0.807      0.364

      Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
       6/10      4.01G      1.269       1.26      1.864          7        640: 100% 10/10 [00:02<00:00, 3.79it/s]
                 Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100% 1/1 [00:00<00:00, 2.39it/s]
                   all         29         29      0.893      0.862      0.928      0.386

      Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
       7/10         4G      1.111       1.15      1.714          6        640: 100% 10/10 [00:03<00:00, 3.06it/s]
                 Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100% 1/1 [00:00<00:00, 1.12it/s]
                   all         29         29      0.878      0.828      0.882      0.391

      Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
       8/10      4.01G      1.183      1.044      1.792          6        640: 100% 10/10 [00:02<00:00, 3.58it/s]
                 Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100% 1/1 [00:00<00:00, 2.09it/s]
                   all         29         29      0.848      0.966      0.955      0.491

      Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
       9/10         4G       1.08      1.052      1.692          6        640: 100% 10/10 [00:02<00:00, 3.78it/s]
                 Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100% 1/1 [00:00<00:00, 2.24it/s]
                   all         29         29      0.917      0.966      0.958      0.545

      Epoch    GPU_mem   box_loss   cls_loss   dfl_loss  Instances       Size
      10/10         4G      0.962     0.8895      1.587          6        640: 100% 10/10 [00:02<00:00, 3.40it/s]
                 Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100% 1/1 [00:00<00:00, 1.23it/s]
                   all         29         29      0.893       0.86      0.933      0.555

10 epochs completed in 0.016 hours.
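
The final validation of `best.pt` further down reports the same metrics as epoch 10, which suggests epoch 10 was selected as the best checkpoint. The sketch below ranks the ten validation rows with a weighted score of 10% mAP50 and 90% mAP50-95. That weighting is an assumption about how the best checkpoint is chosen and does not appear in this log.

```python
# (precision, recall, mAP50, mAP50-95) for epochs 1-10, copied from the "all" rows.
val_metrics = [
    (0.666, 0.655, 0.717, 0.279),
    (0.603, 0.828, 0.721, 0.277),
    (0.813, 0.759, 0.807, 0.390),
    (0.744, 0.703, 0.757, 0.348),
    (0.632, 0.862, 0.807, 0.364),
    (0.893, 0.862, 0.928, 0.386),
    (0.878, 0.828, 0.882, 0.391),
    (0.848, 0.966, 0.955, 0.491),
    (0.917, 0.966, 0.958, 0.545),
    (0.893, 0.860, 0.933, 0.555),
]


def fitness(row):
    # Assumed weighting: 10% mAP50 + 90% mAP50-95; precision and recall are ignored.
    _, _, map50, map50_95 = row
    return 0.1 * map50 + 0.9 * map50_95


best_epoch = max(range(len(val_metrics)), key=lambda i: fitness(val_metrics[i])) + 1
best_score = fitness(val_metrics[best_epoch - 1])
print(best_epoch, round(best_score, 4))
```

Note that epoch 9 has higher precision, recall and mAP50 than epoch 10, so a score based on mAP50 alone would pick a different checkpoint.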

Optimizer stripped from runs/detect/train2/weights/last.pt, 22.5MB

Optimizer stripped from runs/detect/train2/weights/best.pt, 22.5MB

Validating runs/detect/train2/weights/best.pt...

Ultralytics YOLOv8.0.220 🚀 Python-3.10.12 torch-2.1.0+cu118 CUDA:0 (Tesla T4, 15102MiB)

Model summary (fused): 168 layers, 11125971 parameters, 0 gradients, 28.4 GFLOPs

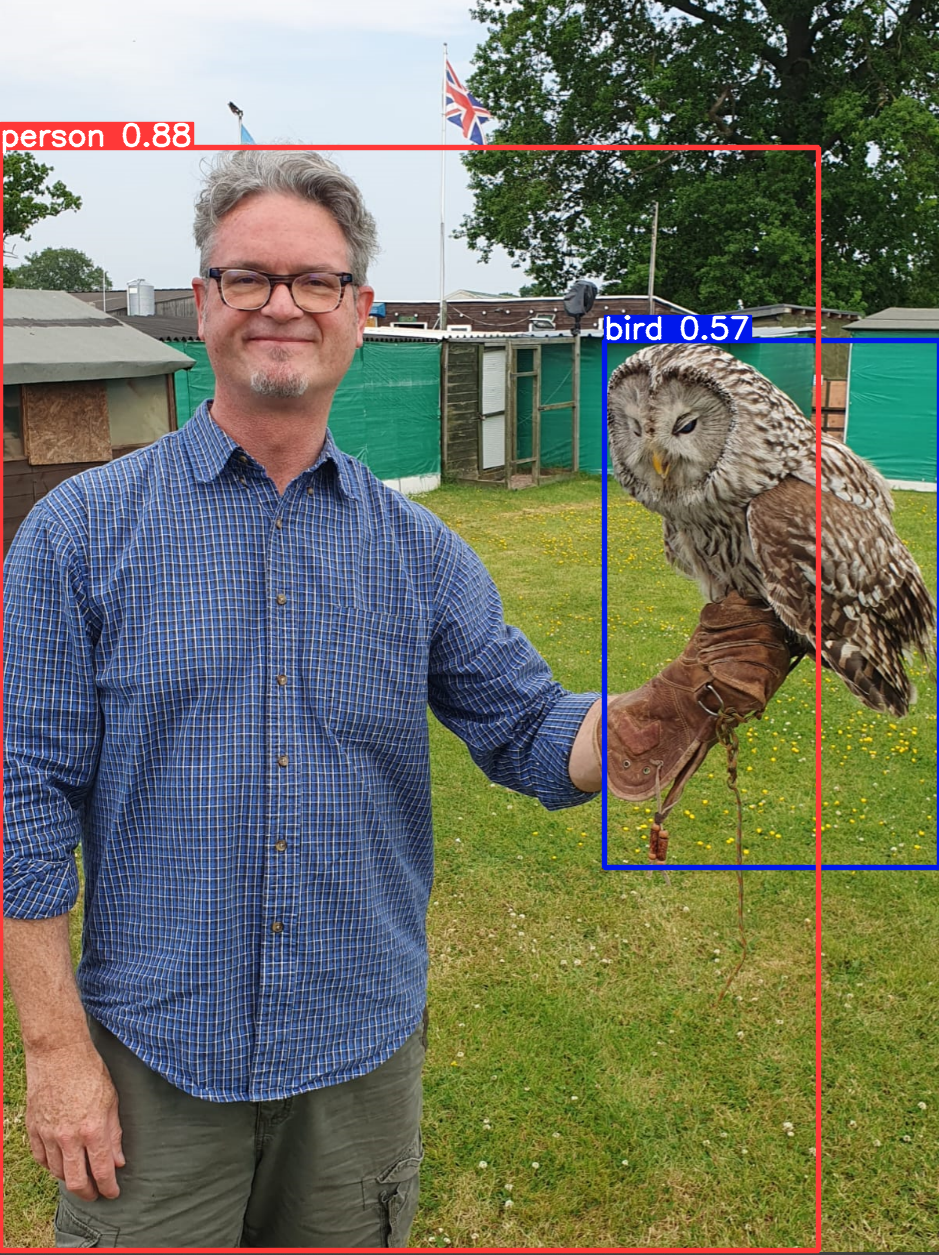

                 Class     Images  Instances      Box(P          R      mAP50  mAP50-95): 100% 1/1 [00:00<00:00, 2.54it/s]
                   all         29         29      0.893       0.86      0.933      0.555

Speed: 0.2ms preprocess, 6.0ms inference, 0.0ms loss, 1.8ms postprocess per image
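
The per-image stage times in the "Speed:" line can be summed to estimate end-to-end throughput on this GPU. A short sketch, using only the four numbers printed above:

```python
# Per-image stage times in milliseconds, copied from the "Speed:" line.
stages_ms = {"preprocess": 0.2, "inference": 6.0, "loss": 0.0, "postprocess": 1.8}

total_ms = sum(stages_ms.values())
fps = 1000.0 / total_ms
print(f"{total_ms:.1f} ms per image, about {fps:.0f} images/s")
```

These timings come from a single validation batch of 29 images, so they are a rough indication and not a benchmark.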

Results saved to runs/detect/train2

💡 Learn more at https://docs.ultralytics.com/modes/train